Pictorial Input Leads to Dynamic Text Style Generation

The concept of deepfakes scares a lot of people. Most of the time, when these AI-powered projects gain widespread attention, it’s because they were used unethically.

From identity theft to fake news, we’ve seen the worst side of this tech. But Facebook is one company that wants to use it to drive future innovation instead.

What Is TextStyleBrush?

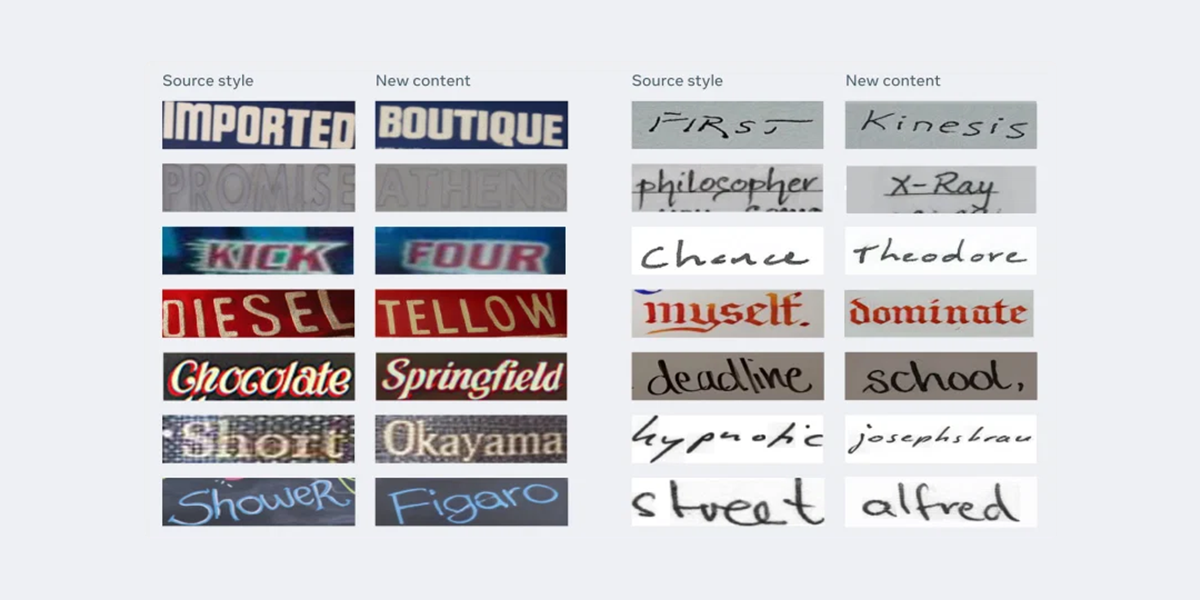

Researchers at Facebook have introduced a new self-supervised AI model, “TextStyleBrush”, on the company’s Newsroom. Using just one image of a single word, it can edit and replace the text while copying the font or visual style it was written in.

The tool works on both handwritten text and text in real-world scenes. It’s an impressive feat because, as Facebook itself notes, the AI has to understand “unlimited” text styles.

Not only are there all sorts of typography and calligraphy out there, but there are also many stylistic details to take into consideration. What if someone writes on a slant or a curve? What if the surface the text is on makes it hard to read? What about background clutter or image noise?

Facebook explained that the TextStyleBrush model works similarly to the style brush tools in word processors, but for the aesthetics of text in images:

It surpasses state-of-the-art accuracy in both automated tests and user studies for any type of text. (…) We take a more holistic training approach and disentangle the content of a text image from all aspects of its appearance of the entire word box. The representation of the overall appearance can then be applied as one-shot-transfer without retraining on the novel source style samples.

If you want a more technical breakdown of how TextStyleBrush works, you can read the full-length article on Facebook’s AI blog.

The Google Translate mobile app has a feature similar to Facebook’s new AI in that it also replaces text, but it solves a different problem: as its name suggests, it translates text into a language of your choosing.

Faces Aren’t the Only Thing That Can Be Deepfaked

Facebook published this research in the hope that it will spark more research and discussion on deepfake text attacks.

The tech world frequently talks about how concerning deepfake faces are, but it rarely discusses the fact that technology can now also create convincing fakes of handwriting, signage, and other text.

If AI researchers and practitioners can get ahead of bad actors, Facebook says, then it’ll be easier to detect when deepfakes are used maliciously, and to build systems to combat them.

- Title: Pictorial Input Leads to Dynamic Text Style Generation

- Author: Michael

- Created at : 2024-10-31 17:35:33

- Updated at : 2024-11-01 16:02:47

- Link: https://facebook.techidaily.com/pictorial-input-leads-to-dynamic-text-style-generation/

- License: This work is licensed under CC BY-NC-SA 4.0.